Whoa! I remember staring at a flashing dashboard, heart racing for a beat. My first thought was: did I miss somethin’? Then the numbers rearranged themselves and my gut said, “Nope, not good.” I froze for a second, then dove into tx traces and protocol docs, because panic is useless without data. The thing that surprised me most was not the exploit itself but how many tiny signals I had ignored for months—warnings that were subtle until they weren’t.

Okay, so check this out. I’m biased, but risk assessment in DeFi is less about finding the single fatal bug and more about collecting little contradictions. Initially I thought that code audits and big-name backers were a near-guarantee, but then I realized that social engineering and oracle quirks do the heavy lifting for attackers. On one hand you can trust the math; on the other hand price feeds, governance delays, and UI mistakes often create the real windows of failure. This tension has changed how I track portfolio exposures and which tools I trust to simulate transactions.

Seriously? Yes. And here’s why simulation matters: a simulated tx surfaces slippage paths, routing quirks, and approval leaks that you rarely see in a blog post or audit summary. My instinct said “simulate everything” and then the analytics confirmed it—simulations catch edge-case routing fees and sandwich risk. But let’s not romanticize tools; they have limits, and I’m not 100% sure any single tool will save you if you ignore liquidity dynamics and market depth.

Hmm… the practical upshot is simple and a bit messy. You want a workflow, not a checklist. A workflow accepts ambiguity and makes verification routine. Step one is protocol triage: score the lending markets, AMM pools, and synthetic positions by how many moving parts they have. Step two is exposure mapping: tag which assets are stablecoin-pegged, which are LP tokens, and which are leveraged. Step three is simulation: run every big rebalance or withdrawal as a dry run and inspect the path. And yes, you should do that every time you touch something with nontrivial TVL.
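Steps one and two fit in a few lines of Python. This is a minimal sketch, not my actual tooling: the `Position` class, the exposure labels, and the `moving_parts` count are hypothetical names made up for illustration, and the dollar values are invented.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Position:
    name: str
    usd_value: float
    exposure_class: str  # hypothetical labels: "stable", "lp", "leveraged"
    moving_parts: int    # rough triage count: oracles, bridges, admin roles, etc.


def exposure_by_class(positions):
    """Sum USD value per exposure class (step two: exposure mapping)."""
    totals = defaultdict(float)
    for p in positions:
        totals[p.exposure_class] += p.usd_value
    return dict(totals)


def triage_order(positions):
    """Most moving parts first (step one: protocol triage)."""
    return sorted(positions, key=lambda p: p.moving_parts, reverse=True)


positions = [
    Position("USDC lending", 5000.0, "stable", 2),
    Position("ETH/USDC LP", 3000.0, "lp", 4),
    Position("Leveraged ETH vault", 2000.0, "leveraged", 6),
    Position("DAI lending", 1000.0, "stable", 2),
]

print(exposure_by_class(positions))
print([p.name for p in triage_order(positions)])
```

Whatever lands at the top of the triage order is what I would simulate first in step three.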

How I use tooling and why I trust certain wallets

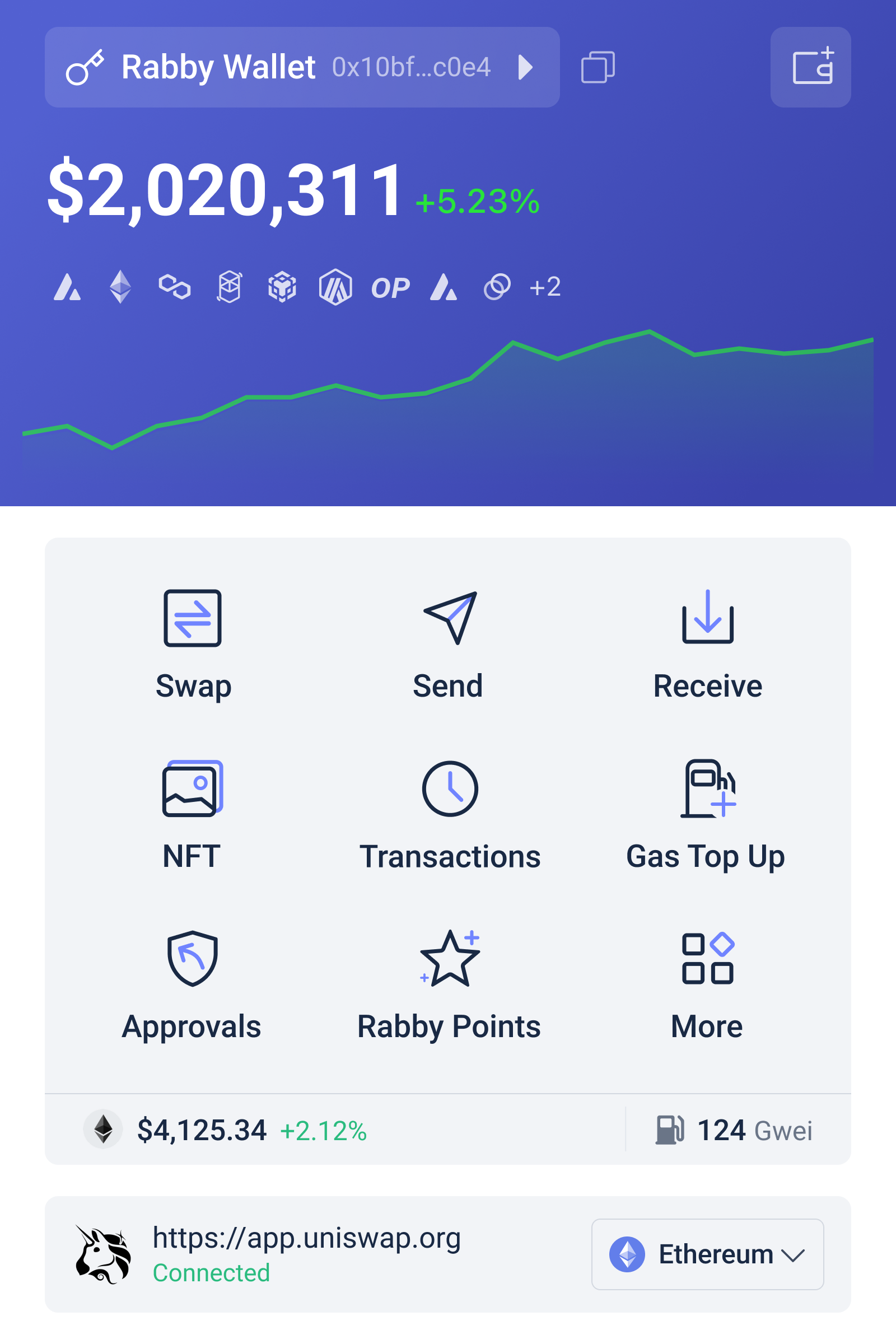

I’ll be honest: wallets matter. They’re not just key stores; they’re your last line of defense and your first UX for simulation and approvals. For me, an advanced Web3 wallet that supports transaction simulation, granular approvals, and clear origin indicators changed my decision-making. I started using Rabby, a wallet that lets me preview calls and decode calldata, and that small switch made me pause before approving risky transactions far more often. There’s a comfort in seeing the exact contract calls and the token routes before I sign anything.

On the analytic side, I break risk into three buckets: systemic, protocol, and personal. Systemic covers chain-level issues and oracle integrity. Protocol is about code and game theory: governance delays, upgradeability, and permissioned modules. Personal is UX and key management: are you reusing approvals? Do you auto-sign transactions on a hot device? Those last two are where most users get bitten, honestly. Oh, and by the way, approvals are tiny attack vectors that add up, so it’s very important to clean them up.

Working through protocols requires a mix of heuristics and data. Heuristic one: ask “what could skew the price feed?” Heuristic two: look for administrative escape hatches or multisig setups you don’t understand. Heuristic three: check for unusual incentives—are liquidity providers getting paid in a separate token that could depeg? These aren’t full proofs; they’re red flags that guide deeper dives.

Initially I thought audits would be the golden ticket, but audits are snapshots. Actually, wait—let me rephrase that: audits are useful, but they’re a single chapter in a protocol’s story, not the whole book. On-chain behavior after launch—like weird governance proposals or odd treasury movements—often tells you more. So I monitor governance forums and multisig tx queues the way some people watch health metrics. It pays off.

Here’s what bugs me about dashboard-only assessments: they flatten time. Liquidity looks fine until a whale LP unstakes, or an algorithmic peg breaks when a major LP exits. You need to model stress scenarios, not just current balances. Run a hypothetical 30% withdrawal and inspect price impact. Run a sandwich attack simulation and measure slippage. If your wallet can simulate gas, slippage, and calldata, you get actionable intel in seconds, with no guesswork.
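Here is a rough sketch of the 30% withdrawal test, assuming a plain constant-product pool (x * y = k) with no fees. Pools with concentrated liquidity or stable-swap curves behave differently, and the reserve numbers below are invented.

```python
def swap_out(reserve_in: float, reserve_out: float, amount_in: float) -> float:
    """Constant-product output, ignoring fees: dy = y * dx / (x + dx)."""
    return reserve_out * amount_in / (reserve_in + amount_in)


def price_impact(reserve_in: float, reserve_out: float, amount_in: float) -> float:
    """Fractional gap between the spot price and the average executed price."""
    spot_price = reserve_out / reserve_in
    executed_price = swap_out(reserve_in, reserve_out, amount_in) / amount_in
    return 1.0 - executed_price / spot_price


def after_withdrawal(reserve_in: float, reserve_out: float, fraction: float):
    """Reserves after LPs pull `fraction` of liquidity proportionally."""
    keep = 1.0 - fraction
    return reserve_in * keep, reserve_out * keep


# Assumed pool: 1,000 ETH against 2,000,000 USDC, and a 50 ETH sell.
eth, usdc, trade = 1_000.0, 2_000_000.0, 50.0

before = price_impact(eth, usdc, trade)
eth_after, usdc_after = after_withdrawal(eth, usdc, 0.30)
after = price_impact(eth_after, usdc_after, trade)

print(f"impact before: {before:.2%}, after 30% exit: {after:.2%}")
```

The spot price doesn't move when liquidity leaves proportionally, which is exactly why a dashboard looks calm. What changes is depth: the same trade costs noticeably more after the exit.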

On portfolio tracking: consolidate balances across chains and group them into exposure classes. Stablecoin-heavy exposure isn’t identical to true dollar exposure when there are cross-chain bridges involved. I track “bridge risk” as its own line item now: if I move assets across a bridge, I assume a certain failure probability and price friction, and I size positions accordingly. This kind of probabilistic thinking isn’t perfect, but it’s better than pretending bridges are magical pipes.
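To make the bridge line item concrete, here is a minimal sketch of the sizing arithmetic. The failure probability, loss-given-failure, and friction figures are placeholders I picked for illustration, not estimates for any real bridge, and the function names are hypothetical.

```python
def bridge_cost_per_dollar(p_fail: float, loss_given_failure: float, friction: float) -> float:
    """Expected cost of bridging one dollar: failure loss plus price friction."""
    return p_fail * loss_given_failure + friction


def max_bridged_position(loss_budget_usd: float, p_fail: float,
                         loss_given_failure: float = 1.0,
                         friction: float = 0.0) -> float:
    """Largest position whose expected bridge cost stays inside the budget."""
    cost = bridge_cost_per_dollar(p_fail, loss_given_failure, friction)
    if cost <= 0:
        raise ValueError("expected cost per dollar must be positive")
    return loss_budget_usd / cost


# Assumed inputs: 2% failure chance, total loss on failure, 0.3% friction,
# and a willingness to "pay" 230 USD in expected cost.
size = max_bridged_position(230.0, p_fail=0.02, loss_given_failure=1.0, friction=0.003)
print(f"max bridged position: {size:,.0f} USD")
```

Expected value hides the tail, so I treat the result as a ceiling, not a target.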

Also, diversify monitoring sources. One analytic provider will miss exchange-of-last-resort behavior that another tags early. Combining chain explorers, mempool watchers, and simulation-enabled wallets gives multiple views of the same operation. It’s like having both a microscope and a radar—you want both to catch different classes of problems.

There are a few technical things I look for when assessing DeFi protocols. First, oracle design: whether it’s a time-weighted average price (TWAP) or an external price oracle with guardrails. Second, upgrade paths: is governance purely on-chain with short timelocks? Third, permissioned roles: are there multisigs with single points of failure? Fourth, economic assumptions: how does the protocol react under 50% volatility? Fifth, composability risks: does an external contract rely on this protocol in a trust-minimized way? Each of these dimensions deserves a score, and combining them helps prioritize audits and simulations.
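One way to combine those five dimensions is a weighted average. This is a sketch under my own assumptions: the 0 to 5 scale (higher means riskier), the dimension keys, and the equal default weights are all illustrative choices, not a standard.

```python
DIMENSIONS = ("oracle", "upgrades", "roles", "economics", "composability")


def risk_score(scores: dict, weights: dict = None) -> float:
    """Weighted average of per-dimension scores (0 = benign, 5 = alarming)."""
    weights = weights or {d: 1.0 for d in DIMENSIONS}
    missing = [d for d in DIMENSIONS if d not in scores]
    if missing:
        raise ValueError(f"missing scores: {missing}")
    total_weight = sum(weights[d] for d in DIMENSIONS)
    return sum(scores[d] * weights[d] for d in DIMENSIONS) / total_weight


# Hypothetical protocol: shaky oracle, weak multisig, otherwise middling.
example = {"oracle": 4, "upgrades": 2, "roles": 5, "economics": 3, "composability": 1}

print(risk_score(example))
# Weight the oracle twice as heavily as everything else.
print(risk_score(example, {**{d: 1.0 for d in DIMENSIONS}, "oracle": 2.0}))
```

The absolute number matters less than the ranking it produces across protocols, since the ranking decides where the next simulation hour goes.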

One failed assumption I had was the belief that big TVL equals safety. It felt logical: more users, more scrutiny. But actually, high TVL sometimes means a single whale can destabilize things if positioning is opaque. So now I slice TVL by concentration: what percentage is held by the top 10 addresses? That number tells you whether a protocol has truly decentralized liquidity or just a few players providing depth.
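The concentration check is simple arithmetic once you have per-address balances. A minimal sketch, assuming the balances have already been pulled from an explorer or indexer (fetching them is out of scope here, and the numbers are invented):

```python
def top_n_share(balances, n: int = 10) -> float:
    """Fraction of total deposits held by the n largest addresses."""
    total = sum(balances)
    if total <= 0:
        raise ValueError("total balance must be positive")
    largest = sorted(balances, reverse=True)[:n]
    return sum(largest) / total


# Hypothetical pool: three whales plus thirty small depositors.
balances = [400.0, 200.0, 100.0] + [10.0] * 30

print(f"top-10 share: {top_n_share(balances):.0%}")
print(f"top-1 share:  {top_n_share(balances, n=1):.0%}")
```

I look at the top-1 share alongside the top-10 share, because a single address holding most of the depth is a different problem from ten mid-sized ones.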

Workflow example (quick, practical): before any large change to my positions I create a “preflight” folder with three artifacts. Artifact A is a simulation report showing the exact execution path and gas. Artifact B is a short risk memo: oracle design, governance delays, and permissioned roles. Artifact C is a contingency plan: how to exit and what thresholds trigger manual intervention. This sounds a bit overengineered for retail—but when you’re long on volatile LPs, it becomes necessary.
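If you want the preflight folder to be enforceable and not just aspirational, a tiny gate helps. This is a sketch with hypothetical names (`Preflight`, `missing_artifacts`); the three fields simply mirror artifacts A, B, and C.

```python
from dataclasses import dataclass, fields


@dataclass
class Preflight:
    simulation_report: str = ""   # Artifact A: execution path and gas
    risk_memo: str = ""           # Artifact B: oracle, governance, roles
    contingency_plan: str = ""    # Artifact C: exit route and thresholds


def missing_artifacts(preflight: Preflight) -> list:
    """Names of artifacts that are still empty or whitespace-only."""
    return [f.name for f in fields(preflight)
            if not getattr(preflight, f.name).strip()]


draft = Preflight(simulation_report="swap path via two routers, gas estimated")
todo = missing_artifacts(draft)

if todo:
    print("not ready, still missing:", ", ".join(todo))
else:
    print("preflight complete")
```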

There are tools that automate parts of that workflow, and they matter because cognitive load kills good decisions. When I have to manually decode calldata or trace swaps through five routers, I delay decisions—or worse, I make a lazy approval. Automating simulation and presenting the results in a readable way reduces those lazy approvals. It also forces you to confront oddities instead of glossing over them.

One more thing—psychology matters. Herd behavior in DeFi is brutal. When a token moons, FOMO shuts down risk detectors. When it dumps, people ignore remediation because of loss aversion. My system includes a small friction: a simulated “approve and wait” step where I can’t immediately approve the live tx for a short window. It sounds silly, but forcing a pause does two things: it reduces impulse mistakes and it gives me time to re-run simulations if market conditions change. Somethin’ as simple as that saved me once.
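The forced pause is easy to prototype. Here is a minimal sketch, assuming a local script sits between you and the signing step; `ApprovalCooldown` is a hypothetical helper, not a feature of any wallet I know of.

```python
import time


class ApprovalCooldown:
    """Blocks approval of a transaction until a waiting window has passed."""

    def __init__(self, wait_seconds: float, clock=time.monotonic):
        self._wait = wait_seconds
        self._clock = clock          # injectable so the timer can be tested
        self._requested = {}

    def request(self, tx_id: str) -> None:
        """Start (or restart) the waiting window for a transaction."""
        self._requested[tx_id] = self._clock()

    def can_approve(self, tx_id: str) -> bool:
        """True only if the tx was requested and the window has elapsed."""
        started = self._requested.get(tx_id)
        return started is not None and self._clock() - started >= self._wait


cooldown = ApprovalCooldown(wait_seconds=300)
cooldown.request("rebalance-1")
print(cooldown.can_approve("rebalance-1"))  # still inside the window
```

Calling `request` again restarts the window, which suits the case where I re-run the simulation because the market moved.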

FAQ

How often should I simulate transactions?

Every meaningful transaction. If funds moved exceed your daily tolerance, simulate. If it’s a routine small swap you do often, simulate at least weekly or when market volatility spikes.
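That rule of thumb can be written as a small decision function. A sketch only: the parameter names are hypothetical, and the seven-day fallback is just my reading of "at least weekly."

```python
def should_simulate(amount_usd: float, daily_tolerance_usd: float,
                    days_since_last_sim: int, volatility_spike: bool) -> bool:
    """Decide whether a transaction gets a dry run before signing."""
    if amount_usd > daily_tolerance_usd:
        return True                      # above tolerance: always simulate
    if volatility_spike:
        return True                      # routine swap, but the market is jumpy
    return days_since_last_sim >= 7      # routine swap: at least weekly


print(should_simulate(5_000, 1_000, 0, False))
print(should_simulate(200, 1_000, 2, False))
print(should_simulate(200, 1_000, 9, False))
```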

What are the red flags when assessing a DeFi protocol?

Concentrated TVL, opaque treasury movements, short timelocks for upgrades, single-account admin keys, and reliance on fragile price oracles. If you see several of these, treat the protocol as high-risk.

Which wallet features matter most for DeFi risk management?

Transaction simulation, granular approval controls, clear contract origin metadata, and easy revocation flows. A wallet that surfaces calldata and lets you preview routing gives you a huge advantage.